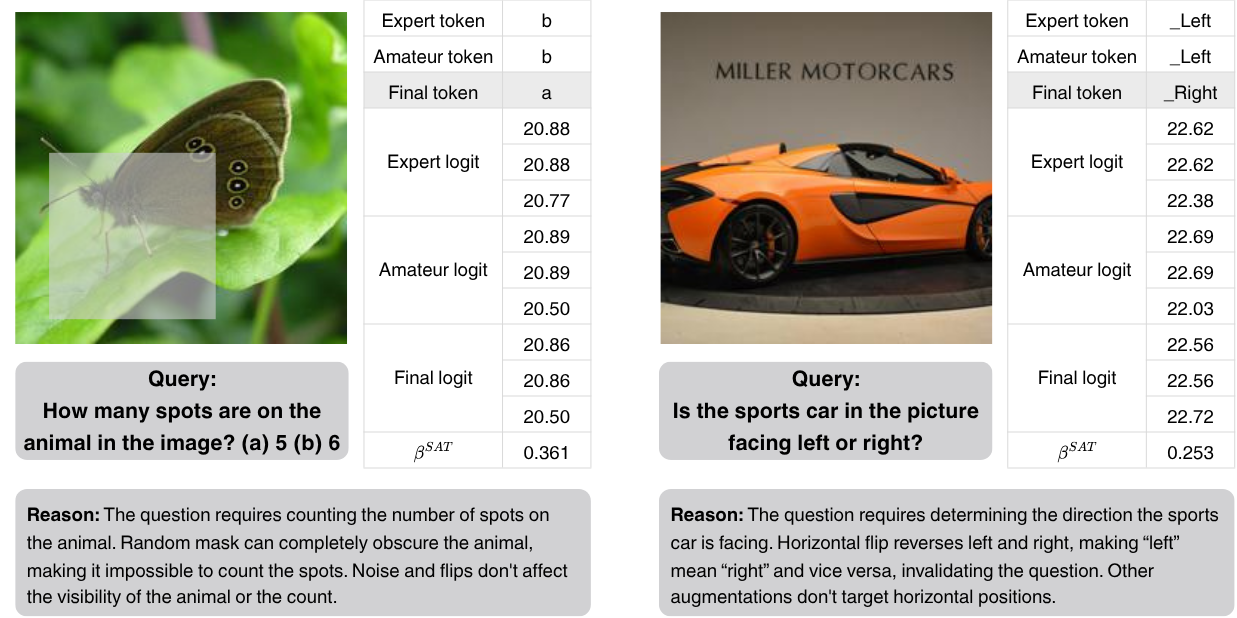

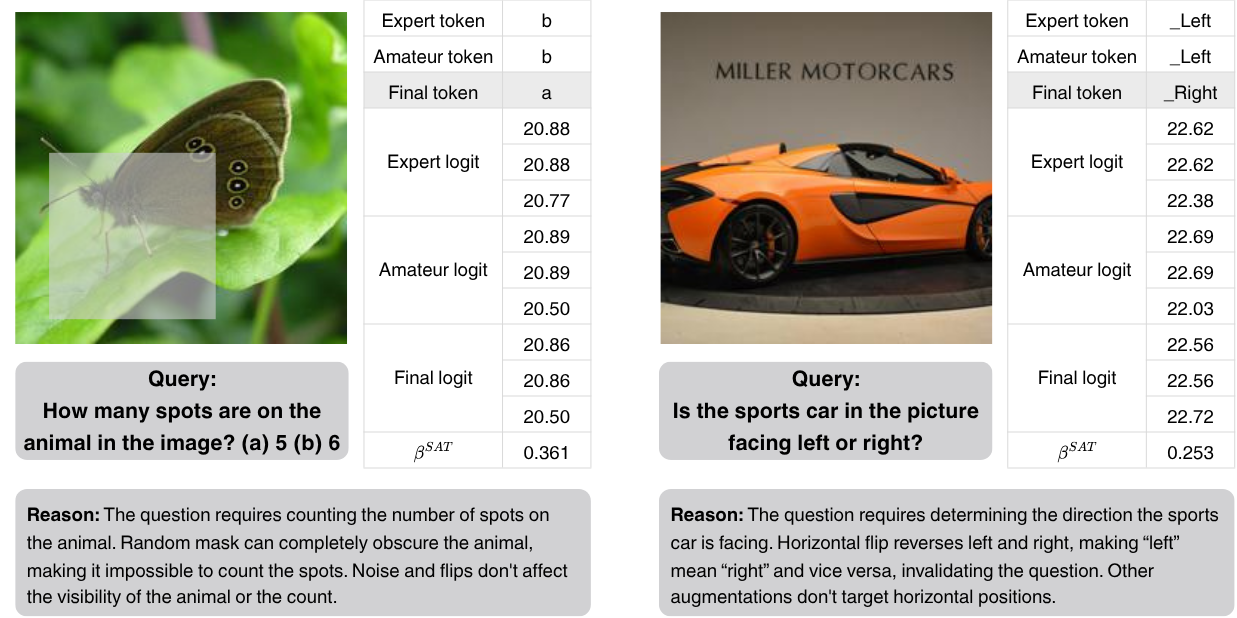

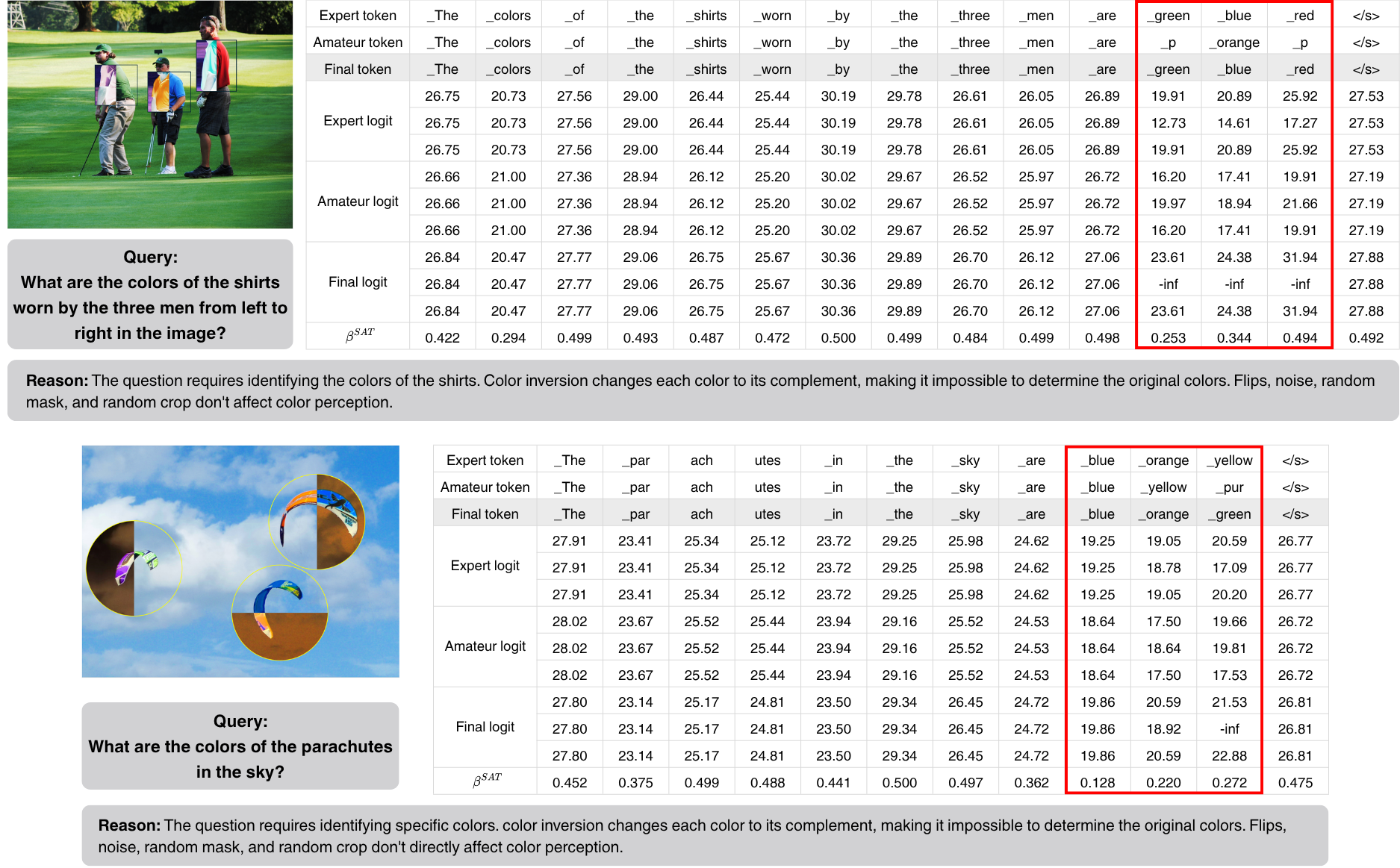

SAVCD examples on MMVP.

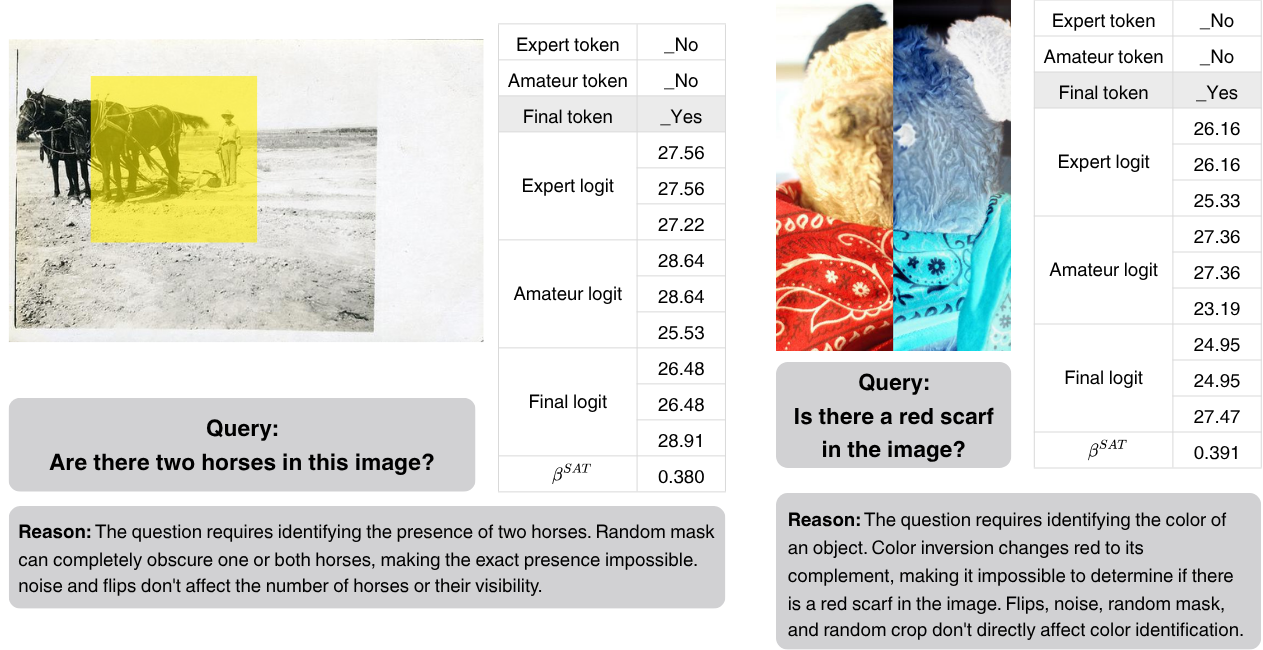

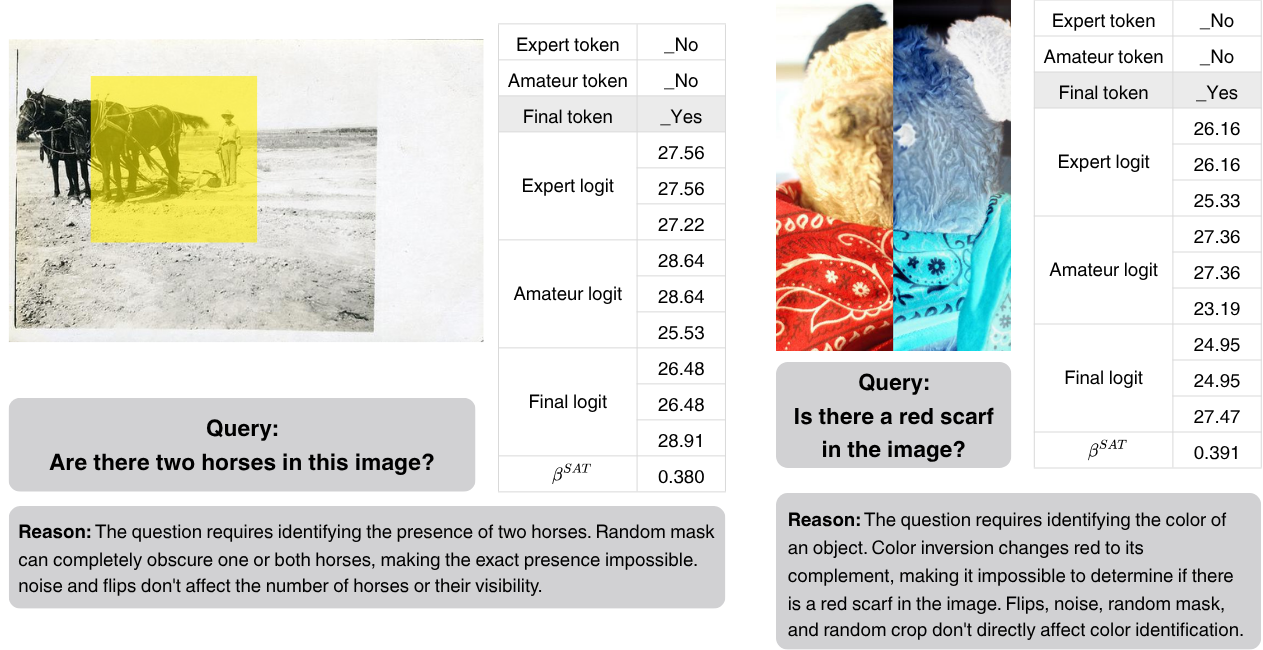

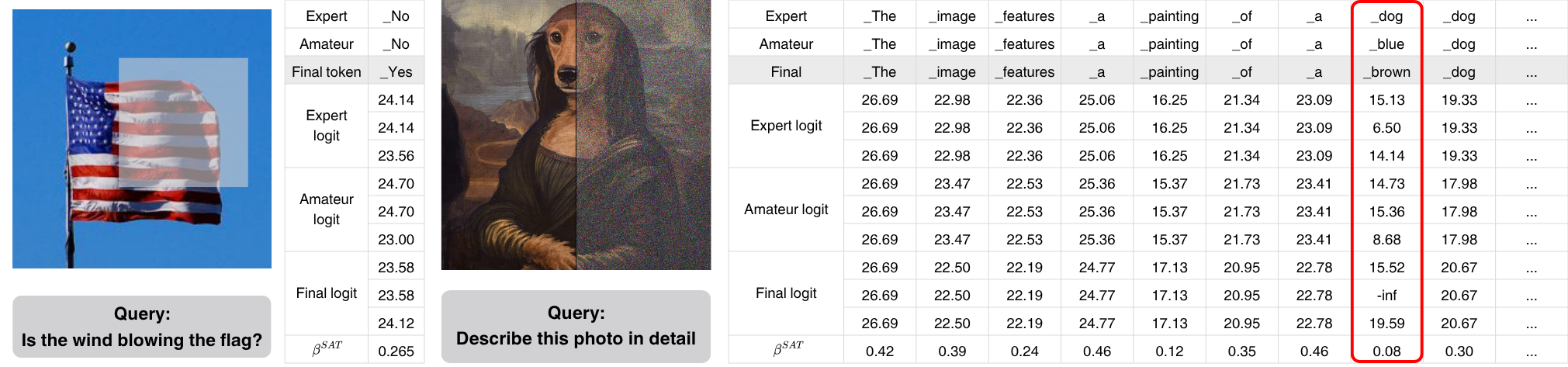

SAVCD examples on MME.

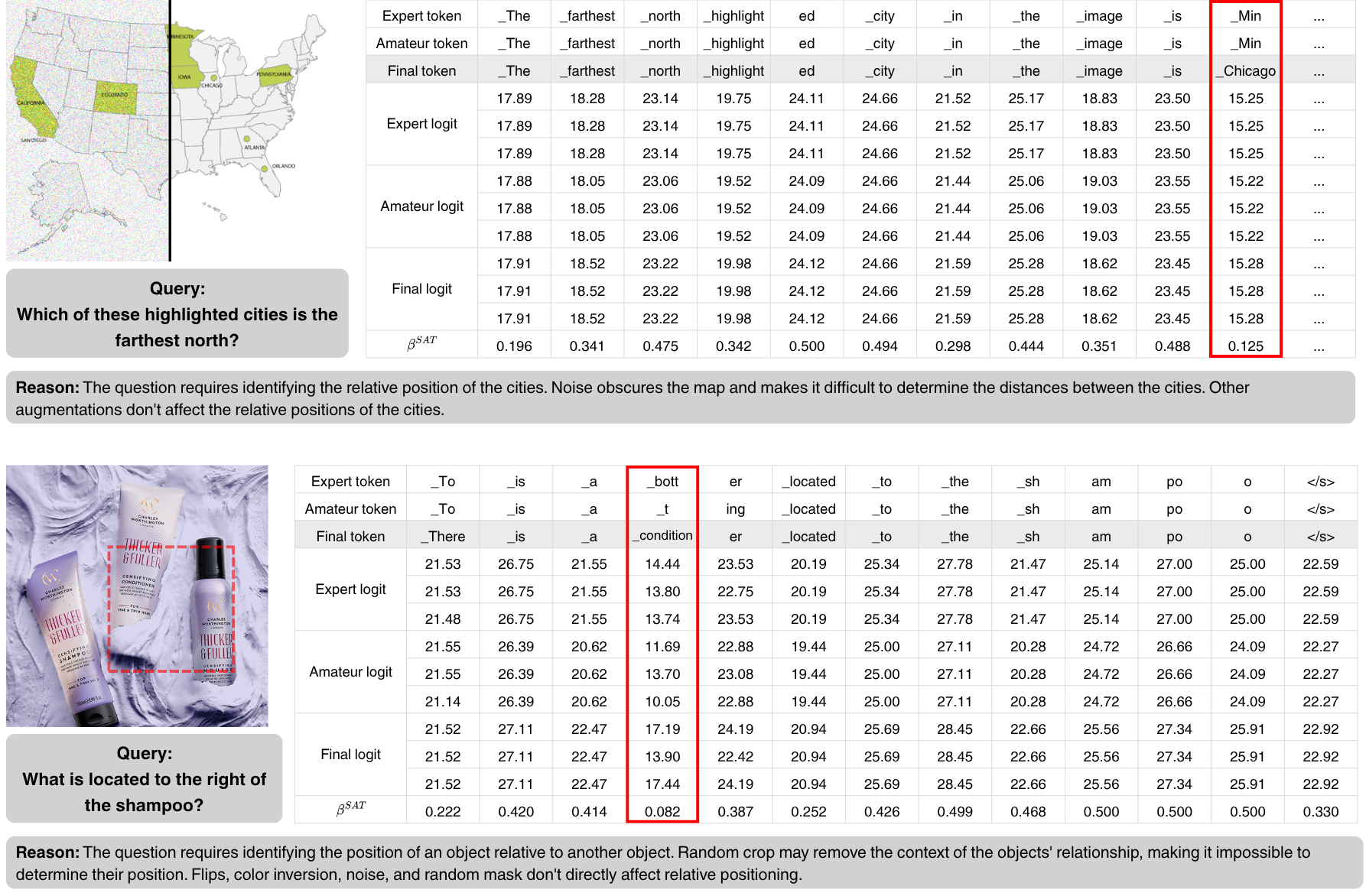

SAVCD examples on MM-Vet.

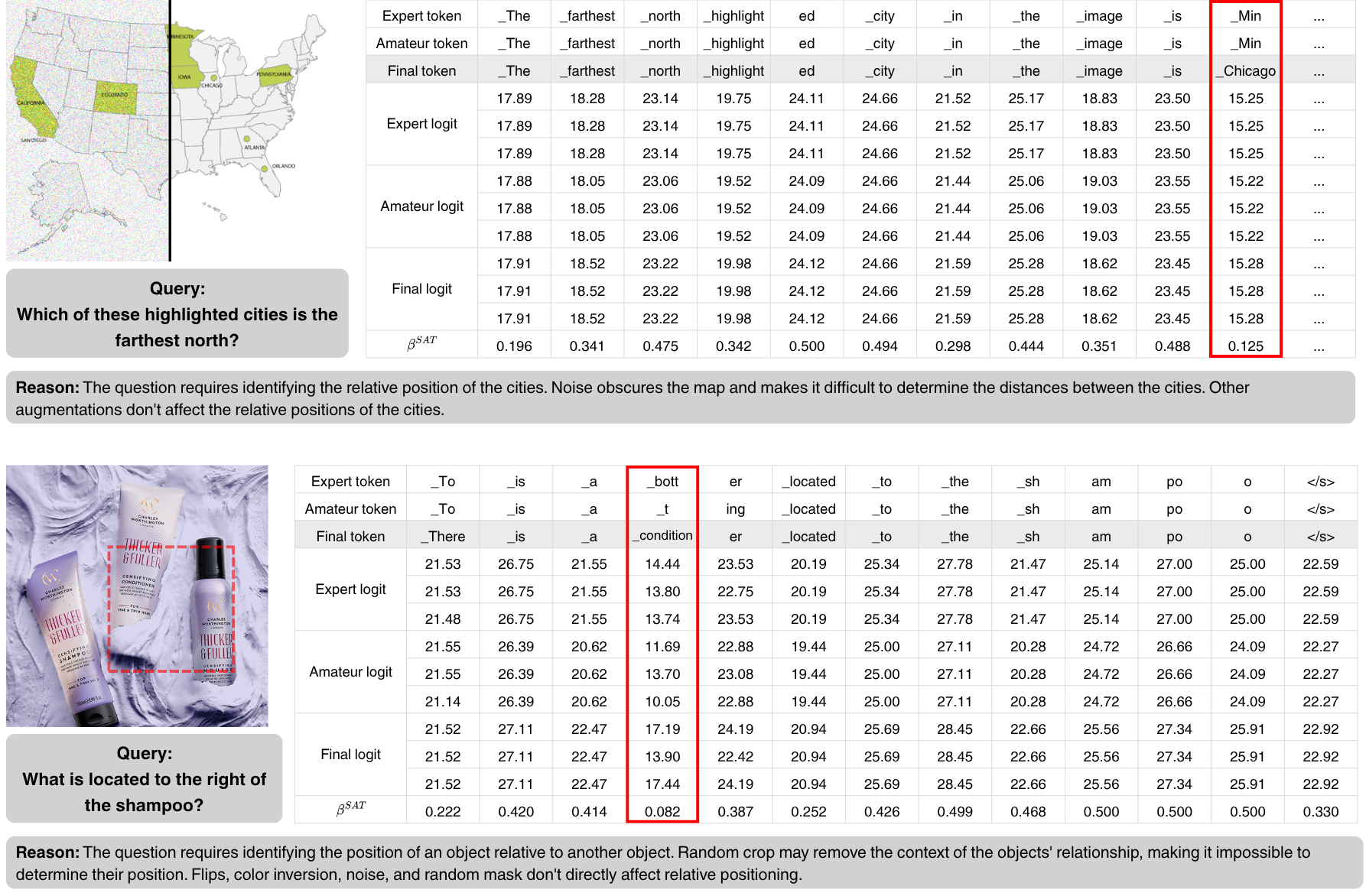

SAVCD examples on MMHal-Bench.

Token level analysis.

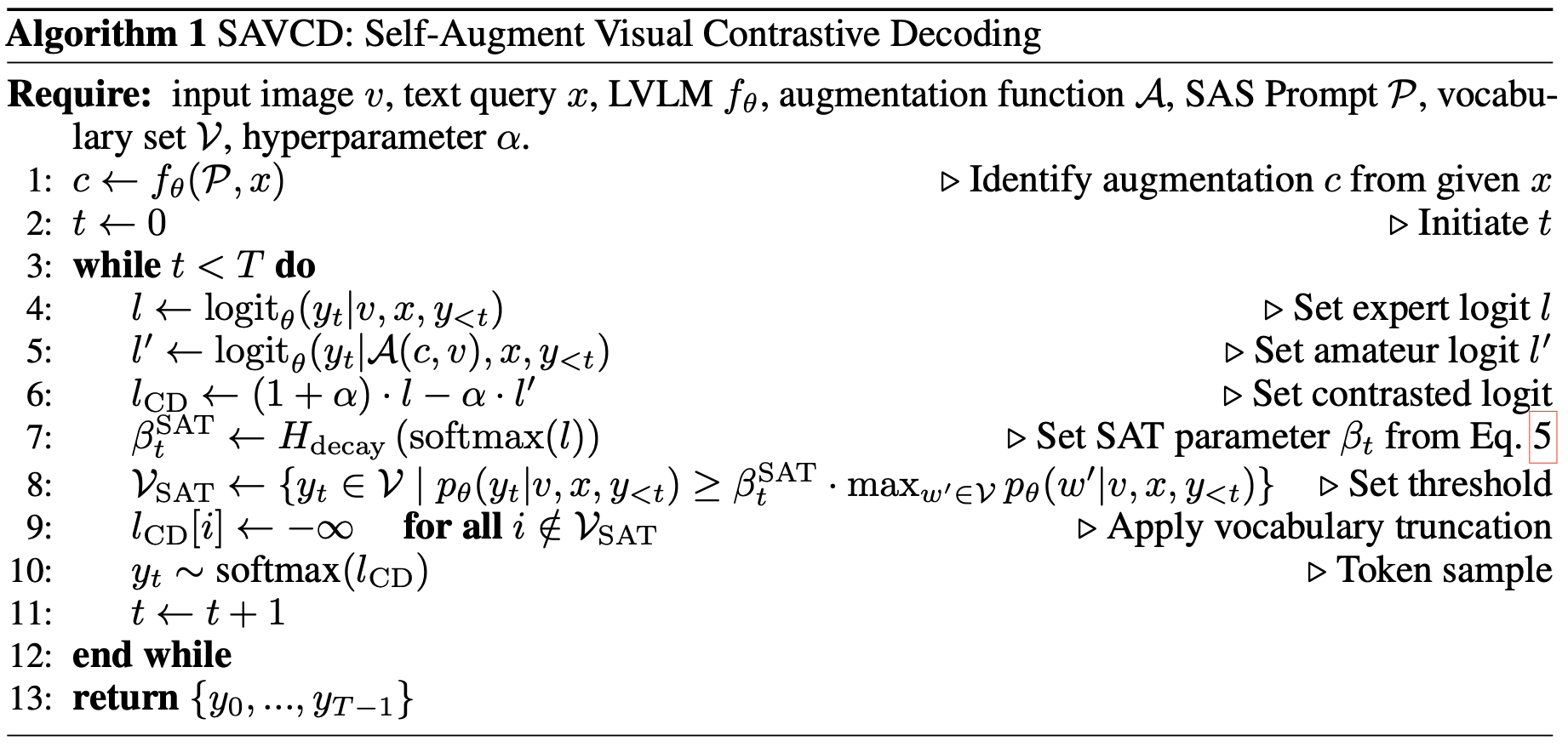

Large Vision-Language Models (LVLMs) have demonstrated remarkable multimodal capabilities, but they inherit the tendency to hallucinate from their underlying language models. While visual contrastive decoding has been proposed to mitigate this issue, existing methods often apply generic visual augmentations that disregard the specific context provided by the text query, limiting their effectiveness. This study introduces a novel training-free decoding strategy that addresses these limitations through two key contributions. First, a self-augmentation prompting strategy leverages the intrinsic knowledge of the model to dynamically align semantics between the query and the visual augmentation. Second, an adaptive thresholding algorithm adjusts the size of the next-token candidate set based on output sparsity, utilizing the full information in the logit distribution. Extensive experiments across four LVLMs and seven benchmarks demonstrate that the proposed decoding significantly enhances factual consistency compared to state-of-the-art decoding methods. This work highlights the importance of integrating query-dependent augmentation and entropy-aware decoding for effective LVLM generation.

To address the research questions, we present Self-Augmented Visual Contrastive Decoding (SAVCD), a confidence-aware decoding method that achieves semantic alignment between the query and the visual augmentation. The proposed method integrates seamlessly into any LVLM without requiring architectural modifications or additional training.

SAVCD is composed of the following two components.

Self-Augmentation Selection (SAS):

SAS employs the parametric knowledge of the LVLM to dynamically select, on the fly, a task-optimal visual augmentation that amplifies the output divergence.

The SAS prompt comprises: (1) explicit definitions of each visual augmentation and its effects, to inject operational knowledge; (2) structured reasoning instructions, to minimize the risk of post hoc rationalization; and (3) in-context examples, to provide contextual knowledge.
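The three prompt ingredients can be illustrated with a small prompt builder. This is only a sketch of the structure described above, not the actual SAS prompt: the augmentation names and descriptions, the in-context example, the `Reasoning:`/`Answer:` layout, and the helper names `build_sas_prompt` and `parse_sas_choice` are all hypothetical placeholders.

```python
# Hypothetical augmentation definitions (ingredient 1); names and effects are placeholders.
AUGMENTATIONS = {
    "noise": "adds random noise, degrading fine detail and texture",
    "color_inversion": "inverts colors, disrupting color-based cues",
    "horizontal_flip": "mirrors the image, disrupting left/right relations",
}

# Hypothetical in-context example (ingredient 3): (question, reasoning, answer).
EXAMPLES = [
    ("What color is the car?", "The question depends on color cues.", "color_inversion"),
]


def build_sas_prompt(query):
    """Assemble a text-only prompt asking the model to pick one augmentation."""
    lines = ["Select the augmentation that most disrupts the evidence needed to answer.", ""]
    lines.append("Augmentations:")
    for name, effect in AUGMENTATIONS.items():
        lines.append(f"- {name}: {effect}")
    # Ingredient 2: ask for reasoning before the answer, so the choice follows from it.
    lines += ["", "First write 'Reasoning:' with your analysis, then 'Answer:' with one name.", ""]
    for question, reasoning, answer in EXAMPLES:
        lines += [f"Question: {question}", f"Reasoning: {reasoning}", f"Answer: {answer}", ""]
    lines += [f"Question: {query}", "Reasoning:"]
    return "\n".join(lines)


def parse_sas_choice(reply, default="noise"):
    """Read the last 'Answer:' line; fall back to a default if it is missing or unknown."""
    for line in reversed(reply.strip().splitlines()):
        if line.lower().startswith("answer:"):
            name = line.split(":", 1)[1].strip().lower()
            if name in AUGMENTATIONS:
                return name
    return default
```

The prompt would be sent to the same LVLM as a text-only request, and the parsed name would determine which augmentation is applied to the image before contrastive decoding.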

Sparsity-Adaptive Truncation (SAT):

SAT mitigates false-positive generation by leveraging the principle that the sparsity of an output distribution is inversely related to its uncertainty. A lenient threshold is required in high-entropy scenarios, while a restrictive threshold is favored in low-entropy cases.

We set βSAT using a decaying function of entropy, built from the Shannon entropy and a sigmoid. Because each logit value is meaningful only relative to the others, this inverse-entropy principle makes the threshold confidence-aware.
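The inverse relationship between entropy and threshold can be sketched in a few lines. This is a minimal illustration, not the authors' formula: the exact form of βSAT is not stated here, so the `2 * sigmoid(-H)` form in `sat_beta` and the plausibility rule "keep tokens with probability at least β times the maximum probability" are assumptions chosen only to show the behavior.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def shannon_entropy(probs):
    """Shannon entropy in nats; zero-probability terms contribute nothing."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def sat_beta(probs):
    """Assumed threshold: 2 * sigmoid(-H), which is 1 at H = 0 and decays as H grows."""
    h = shannon_entropy(probs)
    return 2.0 / (1.0 + math.exp(h))


def sat_candidates(logits):
    """Return indices of tokens that pass the assumed cutoff, together with beta."""
    probs = softmax(logits)
    beta = sat_beta(probs)
    cutoff = beta * max(probs)
    return [i for i, p in enumerate(probs) if p >= cutoff], beta
```

Under these assumptions, a peaked distribution such as `[10.0, 0.0, 0.0, 0.0]` yields a β close to 1 and keeps only the top token, while a flat distribution such as `[1.0, 1.0, 1.0, 1.0]` yields a much smaller β and keeps every token.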

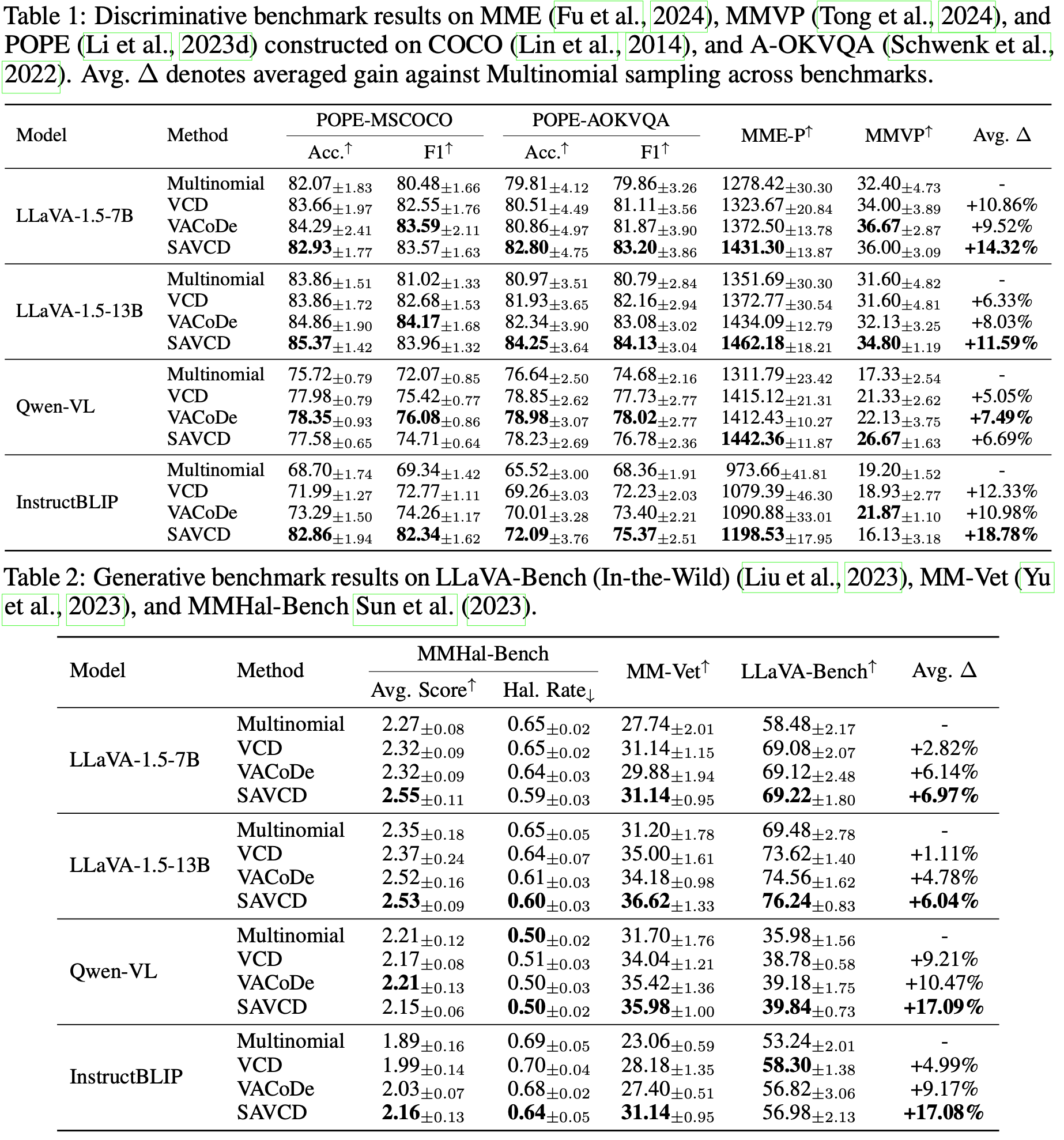

Discriminative benchmarks assess the factuality of visual recognition in the form of binary or multiple-choice questions, while generative benchmarks evaluate broader capabilities by requiring open-ended responses. SAVCD achieves performance gains across both benchmark categories, ranging from 6.69% to 18.78% relative to multinomial sampling.

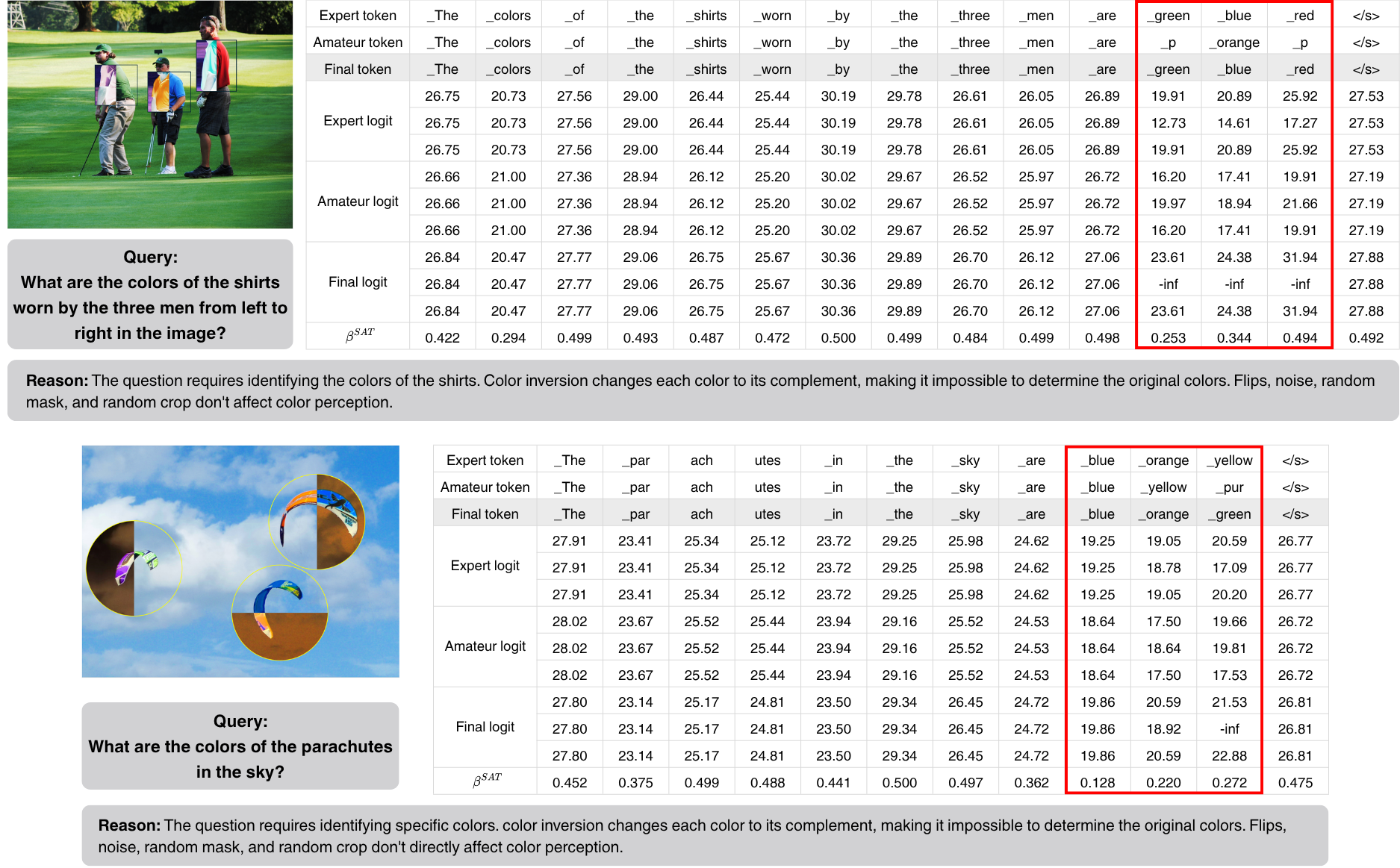

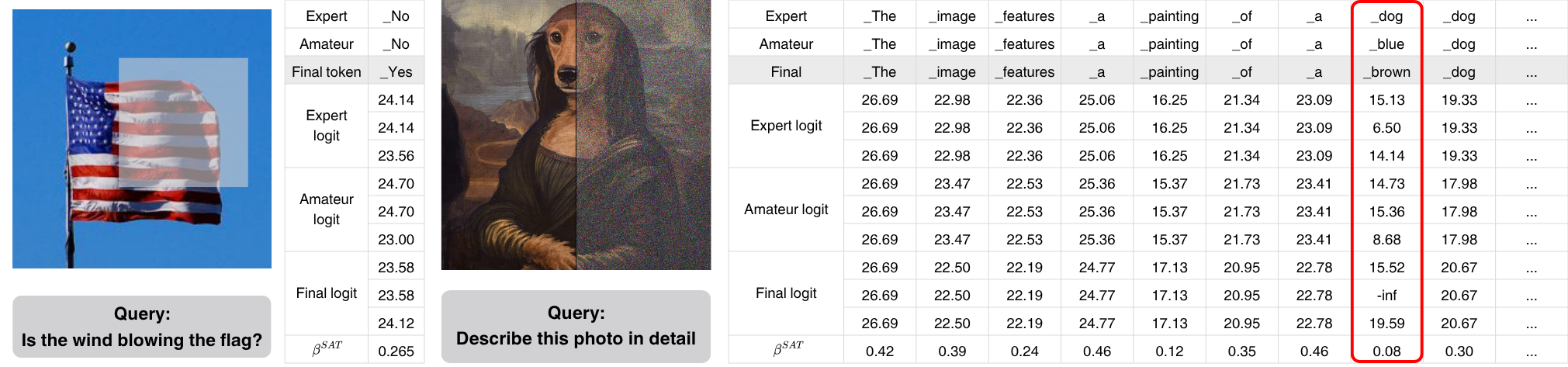

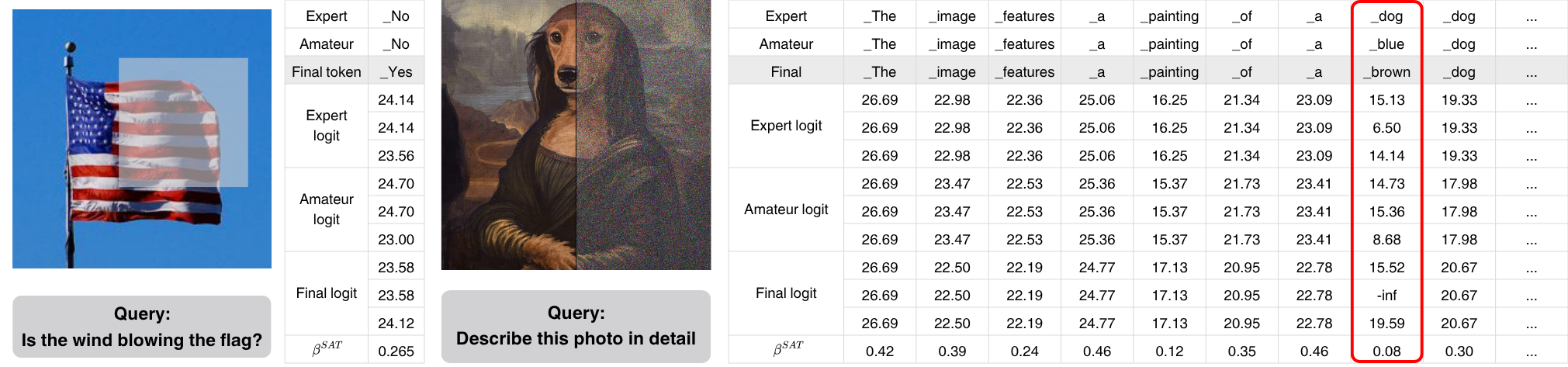

How does SAVCD actually work? A token-level study demonstrates its functionality through three key observations. (1) The example on the left shows a case of failure correction, where the contrastive process between the two logits elevates the score of the correct Yes token, making it the final answer. (2) The example on the right shows a hallucination penalty: random noise triggers the amateur logit to hallucinate the blue token, which is then penalized through subtraction so that its final score falls below the SAT threshold and it is removed from the candidate set. (3) The adaptive nature of the SAT threshold βSAT is visible, with a higher threshold applied to common tokens (e.g., articles, prepositions) and a lower threshold applied to informative, lower-confidence tokens (e.g., painting, the red-boxed token). These findings validate both core components of SAVCD: contextually relevant augmentation selection based on model knowledge effectively amplifies the output divergence by invalidating the premise of the question, and the confidence-aware SAT is effective.
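The first two observations can be reproduced on toy numbers. The sketch below is illustrative only: it uses the contrastive form `(1 + alpha) * expert - alpha * amateur` commonly used in visual contrastive decoding, applies the cutoff to the contrasted scores, and takes `beta` as a fixed input. The logit values, `alpha`, `beta`, and the function names are assumptions, not values from the study.

```python
import math


def contrast_logits(expert, amateur, alpha=1.0):
    """Subtract the amateur (augmented-image) logits from the expert (original-image) logits."""
    return [(1.0 + alpha) * e - alpha * a for e, a in zip(expert, amateur)]


def truncate(scores, beta):
    """Keep indices whose softmax probability is at least beta times the top probability."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    probs = [x / total for x in exps]
    cutoff = beta * max(probs)
    return [i for i, p in enumerate(probs) if p >= cutoff]


def decode_greedy(tokens, expert, amateur, beta, alpha=1.0):
    """Return the best surviving token and the list of surviving candidates."""
    scores = contrast_logits(expert, amateur, alpha)
    kept = truncate(scores, beta)
    best = max(kept, key=lambda i: scores[i])
    return tokens[best], [tokens[i] for i in kept]


# Failure correction: the expert alone prefers "No", the contrast flips it to "Yes".
answer, _ = decode_greedy(["Yes", "No"], [1.0, 1.2], [0.2, 1.5], beta=0.1)

# Hallucination penalty: the amateur strongly favors "blue", so it is pushed below the cutoff.
color, survivors = decode_greedy(
    ["red", "blue", "green"], [2.0, 1.8, 0.0], [0.5, 3.0, 0.0], beta=0.5
)
```

In the first call the contrasted scores are roughly `[1.8, 0.9]`, so "Yes" becomes the top token even though the expert logits alone favored "No". In the second call the contrasted scores are `[3.5, 0.6, 0.0]`, so only "red" survives truncation.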

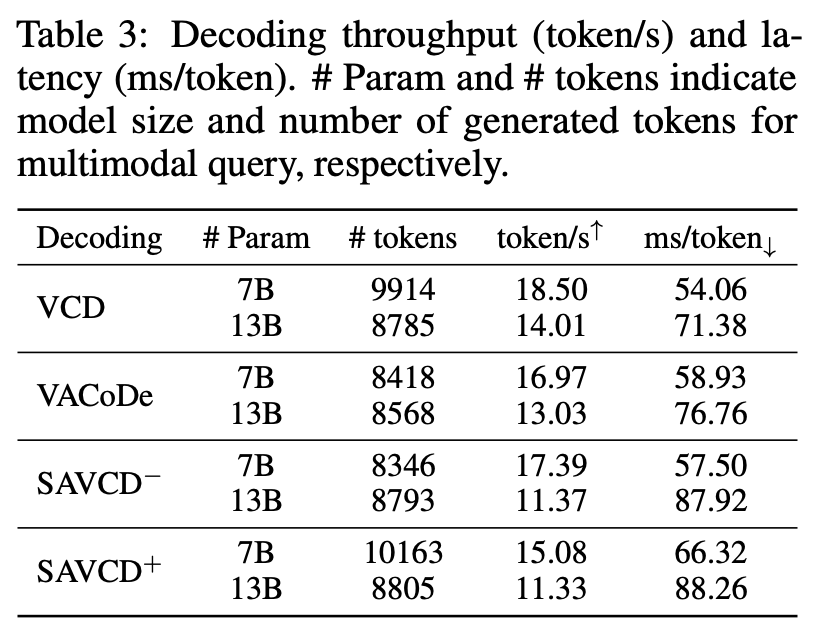

We compare the computational complexity of SAVCD against a query-static method (VCD) and another query-specific method (VACoDe). Superscripts + and - denote strong prompting and efficient prompting, respectively. While VACoDe searches for the optimal augmentation in a brute-force manner, SAVCD has an architectural advantage: it requires only a single text-only generation pass, bypassing the main computational expense of visual tokens, which constitute the majority of the input. This architectural feature enables a flexible trade-off between performance and latency. While the full prompting incurs a cost comparable to VACoDe, the cost-optimized prompting is substantially more efficient.

@article{im2025self,
  title={Self-Augmented Visual Contrastive Decoding},
  author={Im, Eun Woo and Ali, Muhammad Kashif and Gupta, Vivek},
  journal={arXiv preprint arXiv:2510.13315},
  year={2025}
}